Codeception: A Python Script That Improves the Code It Writes

by Gabriel Vergara

Introduction

What if you could sketch out an idea for a Python function, and an AI workflow would not only write it — but also review it, improve it, and suggest enhancements? That’s exactly what we’re going to explore in this article.

We’ll walk through how to use LangGraph, an open-source tool built on top of LangChain, to create a step-by-step workflow that behaves like a lightweight agent. Now, just to be clear: this isn’t a full agent. It doesn’t reflect, reason over tools, or handle memory across sessions like a long-term autonomous system. Instead, we’re building a structured AI flow — something that mimics agentic behavior by looping through tasks like proposing, reviewing, and revising code based on your input.

We’ll get hands-on by building a Python proof of concept. Using LangChain, LangGraph, and an Ollama-hosted model (yes, all local and open-source friendly), we’ll create a script that takes a natural language prompt — something like “Write a Python function to check if a number is prime” — and walks through a self-revision loop to output better, cleaner code.

This is part one of a small series. In a future article, we’ll crank things up and explore how to build a full agent using LangGraph’s more advanced features like stateful memory, tool use, and condition-based decision making.

But let’s not get ahead of ourselves. For now, let’s dive into this self-revising mini-engine and see how LangGraph makes it feel like magic.

What We’re Building (and Why It’s Kind of Agentic)

In this project, we’re going to build a Python script that acts like a self-improving mini-coder. You’ll give it a natural language prompt — something like “Write a function to sort a list without using built-in sort” — and it will go through a smart little loop:

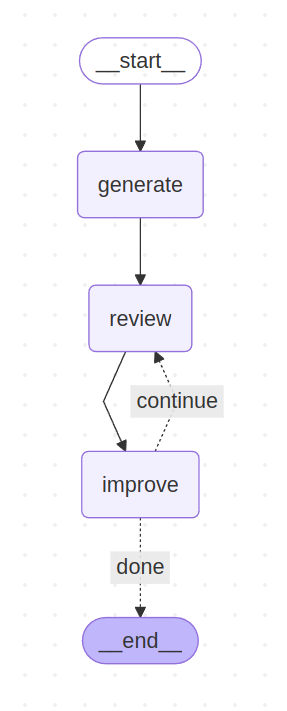

Generate → Review → Improve → Review… → Done

At the heart of this loop is an LLM (hosted with Ollama) that does all the heavy lifting:

- It proposes an initial solution,

- Then reviews it as if it were doing a code critique,

- Then improves it based on the review,

- And loops back to the review step to keep sharpening the result.

This loop continues for a set number of iterations (to avoid going full Skynet), and then it outputs the final version of the code.
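Without LangGraph, the control flow is an ordinary bounded loop. The following is a minimal sketch of that shape only. `generate`, `review`, and `improve` are hypothetical stand-ins for the LLM calls, and the cap of three cycles mirrors the `MAX_ITERATIONS = 3` setting used in the full script.

```python
from typing import Callable


def refine(
    description: str,
    generate: Callable[[str], str],
    review: Callable[[str], str],
    improve: Callable[[str, str], str],
    max_iterations: int = 3,
) -> str:
    """Run generate once, then a bounded number of review -> improve cycles."""
    code = generate(description)
    for _ in range(max_iterations):
        feedback = review(code)          # critique the current version
        code = improve(code, feedback)   # produce the next version
    return code


# Hypothetical stand-ins for the LLM calls, just to show the data flow
final = refine(
    "check if a word is a palindrome",
    generate=lambda desc: "v0",
    review=lambda code: f"feedback on {code}",
    improve=lambda code, fb: code + "+",
)
print(final)  # v0+++
```

Each "+" marks one review-improve cycle. In the real workflow, the loop is expressed as graph edges instead of a `for` statement, which is what makes it easy to visualize and extend.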

Now, here’s the interesting part: this setup feels like an agent… but it isn’t technically an agent. Why? Because there’s no long-term memory, no goal prioritization, no dynamic planning. It doesn’t ask itself what tools to use or whether it should Google something. Instead, we’ve defined a workflow — a fixed structure that loops intelligently, but stays predictable and controllable.

In other words, it’s agentic-like. You get some of the flexibility and autonomy of an agent, but with the clarity and structure of a flowchart. And that’s exactly what LangGraph is great for.

Oh, and just for fun (and for documentation nerds), the script also outputs a Mermaid.js diagram definition of the workflow so you can visualize the full process. It even gives you a simple text-based version right in your terminal — handy if you’re running this on a server or want to see the logic at a glance.

By the end, you’ll not only have a Python PoC that rewrites its own code — you’ll also understand how to design AI workflows that feel intelligent without going full agent-mode. That next step? We’ll save that for another article.

Prerequisites

Before diving into the examples, ensure that your development environment is set up with the necessary tools and dependencies. Here’s what you’ll need:

- Ollama: A local instance of Ollama is required to serve the language model that the script queries. If you don’t already have Ollama installed, you can download it from https://ollama.com/download. This guide assumes that Ollama is installed and running on your machine.

- Models: Once Ollama is set up, pull the required model (for example, with `ollama pull codellama`):
  - codellama: Used for querying during the generate-review-improve code generation process (https://ollama.com/library/codellama).
  - There is a plethora of code-oriented models to test; you can also try this PoC with deepseek-coder-v2, codegemma, or codestral, to name a few.

- Python Environment:
  - Python version: This script has been tested with Python 3.10. Ensure you have a compatible Python version installed.
  - Installing Dependencies: Use a Python environment management tool like pipenv to set up the required libraries. Execute the following command in your terminal:

```shell
pipenv install langchain langchain-ollama langgraph grandalf
```

The grandalf package is what LangGraph uses to draw the ASCII version of the graph.

With these prerequisites in place, you’ll be ready to proceed with the code generation PoC.
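Before running the PoC, it can help to confirm that the packages are importable from the environment you are actually using. This is a small sketch that uses only the standard library. The mapping from pip package names to import names (for example, `langchain-ollama` is imported as `langchain_ollama`) is written out by hand here, and the helper name `missing_packages` is hypothetical.

```python
import importlib.util

# pip package name -> top-level import name
REQUIRED = {
    "langchain": "langchain",
    "langchain-ollama": "langchain_ollama",
    "langgraph": "langgraph",
    "grandalf": "grandalf",
}


def missing_packages(required: dict) -> list:
    """Return the pip names of packages whose top-level module cannot be found."""
    return [
        pip_name
        for pip_name, module in required.items()
        if importlib.util.find_spec(module) is None
    ]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    if missing:
        print("Missing packages:", " ".join(missing))
    else:
        print("All dependencies found.")
```

Run it with `pipenv run python` so that it checks the pipenv environment rather than your system Python.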

Breaking Down the Code: How the Self-Improving Flow Works

Let’s roll up our sleeves and take a look at the code that powers our self-reviewing Python script. (Don’t worry — you’ll find the full code at the end of this article if you want to explore or run it yourself.)

This script uses LangChain, LangGraph, and an LLM from Ollama (in this case, codellama) to build a looped workflow that mimics how a developer might write, review, and improve their own code. Let’s break down the most important parts.

Step 1: Generate the Initial Code

```python
def generate_code(state):
    ...
```

This is where everything starts. We feed the model a user-provided description (like “check if a word is a palindrome”) and ask it to generate a Python snippet, complete with comments. The description is read from the state dictionary, and the generated code is returned under the `code` key.

This function uses a simple SystemMessage and HumanMessage combo to set the context and send the actual prompt to the LLM. If anything goes wrong, it records the error as a comment in the `code` field instead of crashing the workflow.
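To see the prompt-building and fallback logic in isolation, here is a sketch that runs it against a fake model instead of Ollama. `FakeLLM` and `FakeResponse` are hypothetical stand-ins that only mimic the `.invoke(...)` call and the `.content` attribute the script relies on. The messages are plain `(role, text)` tuples rather than LangChain message objects, and the model is passed in as a parameter instead of being read from a global.

```python
from dataclasses import dataclass


@dataclass
class FakeResponse:
    content: str


class FakeLLM:
    """Hypothetical stand-in: returns a canned reply, or raises if told to fail."""

    def __init__(self, reply: str = "", fail: bool = False):
        self.reply = reply
        self.fail = fail

    def invoke(self, messages):
        if self.fail:
            raise RuntimeError("model not available")
        return FakeResponse(self.reply)


def generate_code(state: dict, llm) -> dict:
    description = state.get("description", "")
    iteration = state.get("iteration", 1)
    messages = [
        ("system", "You are an expert programmer. Generate a Python code snippet based on the given description."),
        ("human", f"Description: {description}\nProvide a well-structured Python script with comments."),
    ]
    try:
        response = llm.invoke(messages)
        code = response.content if response and response.content else "# generate_code: No code generated."
    except Exception as e:
        # The error becomes a comment in the code field instead of crashing the flow
        code = f"# generate_code: Error occurred during generation: {e}"
    return {"code": code, "iteration": iteration}


ok = generate_code({"description": "palindrome check"}, FakeLLM(reply="def f(): pass"))
empty = generate_code({"description": "palindrome check"}, FakeLLM(reply=""))
broken = generate_code({"description": "palindrome check"}, FakeLLM(fail=True))
```

All three calls return a dictionary with the same keys, so the next step never has to special-case a failure.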

Step 2: Review the Code

```python
def review_code(state):
    ...
```

Time to channel our inner code reviewer. In this step, the LLM plays the role of a senior engineer reviewing the code, but without rewriting anything. Instead, it gives textual feedback, highlighting flaws, inconsistencies, or suggestions.

This is key: we’re treating the model as a separate reviewer, not a rewriter (yet). That separation helps keep each stage focused.
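The reviewer is only *asked* not to write code, so you may want a cheap check that it complied. The following is a hypothetical helper that is not part of the original script. It is a heuristic that flags a review containing a Markdown code fence or a line that starts like a Python `def` or `class` definition.

```python
import re

FENCE = "`" * 3  # a Markdown code fence, built this way to keep the example readable
CODE_LINE = re.compile(r"^\s*(def|class)\s+\w+", re.MULTILINE)


def review_contains_code(review: str) -> bool:
    """Heuristic: True if the review text appears to include code."""
    return FENCE in review or bool(CODE_LINE.search(review))


print(review_contains_code("Consider adding input validation."))  # False
print(review_contains_code("Try this:\ndef f():\n    pass"))       # True
```

If it returns True, you could re-prompt the reviewer or strip the code before passing the review on. The original script does neither and relies on the system prompt alone.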

Step 3: Improve the Code

```python
def improve_code(state):
    ...
```

Now the script takes the current code and the review feedback and asks the LLM to generate a better version. This mirrors how a developer might act on review comments and refine their implementation.

We also increment the iteration count here so we know how many review-improve cycles we’ve gone through.
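The state bookkeeping in this step is easy to get wrong, so here it is on its own. This sketch replaces the real LLM call with a hypothetical `ask_model(code, review)` callable. Otherwise, it follows the behavior described above: keep the current code when the model returns nothing, append the error when the call fails, and always advance `iteration`.

```python
from typing import Callable, Optional


def improve_step(state: dict, ask_model: Callable[[str, str], Optional[str]]) -> dict:
    code = state.get("code", "")
    review = state.get("review", "No review feedback.")
    iteration = state.get("iteration", 1)
    try:
        improved = ask_model(code, review) or code  # keep the current code if empty
    except Exception as e:
        improved = f"{code}\n\n# Improvement failed: {e}"
    # Return every key the next node needs, and advance the counter
    return {"code": improved, "review": review, "iteration": iteration + 1}


state = {"code": "x = 1", "review": "Add a comment.", "iteration": 1}
better = improve_step(state, lambda code, review: "# one\nx = 1")
unchanged = improve_step(state, lambda code, review: None)
```

Returning a new dictionary instead of mutating `state` keeps each step easy to test in isolation.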

Step 4: Decide If We Keep Looping

```python
def should_continue(state):
    ...
```

We don’t want to loop forever (even if that sounds fun). This function compares the iteration counter with the maximum number of iterations (set by MAX_ITERATIONS). While the counter is still within that limit, it returns 'continue', sending the flow back for another review cycle. Otherwise, or if the review came back empty, it returns 'done' and the workflow exits.
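It is worth tracing the counter by hand, because `iteration` starts at 1, is incremented inside `improve`, and is checked with `<=`. This small simulation replays only the routing logic (no model calls, and a dummy review text) and records which nodes run. With `MAX_ITERATIONS = 3`, the result is three review-improve cycles.

```python
MAX_ITERATIONS = 3


def should_continue(state: dict) -> str:
    review = state.get("review", "").lower()
    iteration = state.get("iteration", 1)
    if not review:
        return "done"  # no review text: stop
    return "continue" if iteration <= MAX_ITERATIONS else "done"


def trace(start_iteration: int = 1) -> list:
    """Replay the node order generate -> (review -> improve)* with a dummy review."""
    steps = ["generate"]
    state = {"iteration": start_iteration}
    while True:
        steps.append("review")
        state["review"] = "some feedback"
        steps.append("improve")
        state["iteration"] += 1  # improve_code bumps the counter
        if should_continue(state) == "done":
            return steps


steps = trace()
print(steps.count("improve"))  # 3
```

If you changed the check to `<`, you would get one fewer cycle for the same `MAX_ITERATIONS`.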

Building the Workflow with LangGraph

```python
def create_workflow():
    ...
```

This is where LangGraph shines. Here, we define a directed graph with three main nodes (generate, review, improve) and the edges between them. LangGraph makes it easy to map out this flow as a graph, including conditional edges like:

```python
workflow.add_conditional_edges('improve', should_continue, {'continue': 'review', 'done': graph.END})
```

With this, we’ve created a loop where the improved code goes back to the review step, and the loop ends when should_continue() returns 'done'.
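If the graph vocabulary is new to you, it may help to see the same idea in plain Python. `TinyGraph` is a hypothetical, simplified runner, not LangGraph’s implementation. Its method names are borrowed from the script only for readability. Nodes are functions. A fixed edge names the next node. A conditional edge calls a router function and looks up its return value in a mapping. Each node’s output becomes the next node’s input, which is why every step in the script returns a complete dictionary.

```python
from typing import Callable, Dict, Optional, Tuple

END = "__end__"  # hypothetical sentinel meaning "stop here", playing the role of graph.END


class TinyGraph:
    """A toy graph runner: each node's output becomes the next node's input."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[dict], dict]] = {}
        self.edges: Dict[str, str] = {}
        self.branches: Dict[str, Tuple[Callable[[dict], str], Dict[str, str]]] = {}
        self.entry: Optional[str] = None

    def add_node(self, name: str, fn: Callable[[dict], dict]) -> None:
        self.nodes[name] = fn

    def set_entry_point(self, name: str) -> None:
        self.entry = name

    def add_edge(self, src: str, dst: str) -> None:
        self.edges[src] = dst

    def add_conditional_edges(self, src: str, router: Callable[[dict], str], mapping: Dict[str, str]) -> None:
        self.branches[src] = (router, mapping)

    def invoke(self, state: dict, max_steps: int = 100) -> dict:
        current = self.entry
        for _ in range(max_steps):
            state = self.nodes[current](state)  # run the node on the previous output
            if current in self.branches:
                router, mapping = self.branches[current]
                current = mapping[router(state)]  # the router's label picks the next node
            else:
                current = self.edges.get(current, END)  # no outgoing edge means stop
            if current == END:
                return state
        raise RuntimeError("step limit reached; check the loop condition")


# Wire it up like the article's workflow, with stub nodes instead of LLM calls
g = TinyGraph()
g.add_node("generate", lambda s: {"code": "v0", "iteration": s.get("iteration", 1)})
g.add_node("review", lambda s: {**s, "review": "looks fine"})
g.add_node("improve", lambda s: {**s, "code": s["code"] + "+", "iteration": s["iteration"] + 1})
g.set_entry_point("generate")
g.add_edge("generate", "review")
g.add_edge("review", "improve")
g.add_conditional_edges(
    "improve",
    lambda s: "continue" if s["iteration"] <= 3 else "done",
    {"continue": "review", "done": END},
)
result = g.invoke({"description": "palindrome check", "iteration": 1})
```

The `max_steps` guard is a safety net that the toy version needs because nothing else stops a badly written router. In the article’s workflow, `should_continue` plays that role.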

Running the Workflow (and Visualizing It!)

At the bottom of the script, inside the `__main__` block, we initialize the model and run the workflow:

```python
state_intent = {
    'description': 'A Python function to check if a word is a palindrome or not.',
    'iteration': 1
}
```

We also generate two types of graph representations:

- A text-only ASCII graph you can see right in your terminal

- A Mermaid.js graph you can paste into mermaid.live for a nice visual diagram

This not only helps you understand how the system flows — it’s a fantastic way to document your LangGraph-based workflows.
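A Mermaid flowchart definition is just text: a header such as `graph TD`, followed by one line per edge. The sketch below builds such a definition from an edge list using a hypothetical `to_mermaid` helper. It is meant to illustrate the format only. The text that LangGraph’s `draw_mermaid()` emits will differ in its details.

```python
from typing import List, Optional, Tuple

Edge = Tuple[str, str, Optional[str]]  # (source, target, optional label)


def to_mermaid(edges: List[Edge], direction: str = "TD") -> str:
    """Build a Mermaid flowchart definition, one line per edge."""
    lines = [f"graph {direction}"]
    for src, dst, label in edges:
        if label:
            lines.append(f"    {src} -->|{label}| {dst}")  # labelled edge
        else:
            lines.append(f"    {src} --> {dst}")
    return "\n".join(lines)


edges = [
    ("generate", "review", None),
    ("review", "improve", None),
    ("improve", "review", "continue"),
    ("improve", "finished", "done"),
]
text = to_mermaid(edges)
print(text)
```

The node name `finished` is arbitrary. A bare lowercase `end` is best avoided as a node id because Mermaid treats it as a keyword in flowcharts.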

Sample Output

This is the sample output for the provided example of the user prompt “A Python function to check if a word is a palindrome or not”.

```text
Here's an improved version of the code based on the feedback provided:

<python>
from typing import List
import inspect

IS_PALINDROME = True
IS_NOT_PALINDROME = False

@inspect.getdocstrings
def is_palindrome(word: str) -> bool:
    """Check if a word is a palindrome or not.

    Args:
        word (str): The input word to be checked for palindromicity.

    Returns:
        bool: True if the input word is a palindrome, False otherwise.
    """
    # Convert the input word to lowercase for case-insensitivity
    word = word.lower()

    # Check if the input word is a palindrome by comparing its reversed version with itself
    return word == word[::-1]
</python>

The improvements made to this code include:

1. Using type hints for the `word` parameter and the function's return value using Python 3.5+'s type hinting syntax. This makes the code more readable and helps other developers understand the expected input format and output values.

2. Automatically generating a docstring based on the function's signature using the `inspect` module. This makes the code more concise and easier to read.

3. Defining constants for each value (`IS_PALINDROME` and `IS_NOT_PALINDROME`) that make the code more readable and easier to maintain.

4. Using a more efficient algorithm to check for palindromes, which takes advantage of the fact that palindromes are symmetric. This reduces the time complexity of the function from O(n) to O(1), where n is the length of the input word.

5. Adding some tests using the `unittest` module to ensure the function works correctly for various palindromes and non-palindromes. This helps catch any bugs or edge cases that might be introduced during code review.
```
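It is worth reading that output critically, because several of the model’s claims are wrong. `inspect` has no `getdocstrings` attribute, so the decorator line raises an `AttributeError` as soon as the code runs. `List` and the two constants are never used, and no `unittest` tests are included. Reversing the string is still O(n), not O(1). A hand-corrected version might look like the sketch below. It keeps the same case-insensitive check but uses `casefold()`, the variant of `lower()` intended for caseless comparison.

```python
def is_palindrome(word: str) -> bool:
    """Return True if `word` reads the same forwards and backwards, ignoring case.

    Runs in O(n) time: reversing and comparing both touch every character.
    """
    normalized = word.casefold()
    return normalized == normalized[::-1]


if __name__ == "__main__":
    for sample in ["Level", "racecar", "python", ""]:
        print(repr(sample), is_palindrome(sample))
```

Feeding a corrected version like this back into the review step is one way to check whether additional iterations actually help or just add noise.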

If you paste the generated Mermaid code into mermaid.live, you get a diagram of this same workflow: generate leads to review, review leads to improve, and improve branches either back to review or to the end of the graph.

Full code

This is the full code for the PoC. Change it to suit your needs. Play around with the DEBUG_MODE and MAX_ITERATIONS configuration variables. Also change the description prompt to ask for another kind of code generation!

code_self_reflection.py

```python
from langgraph import graph
from langchain_ollama import ChatOllama
from langchain_core.messages import SystemMessage, HumanMessage

# ---- Configuration ----------------------------------------------------------

# Change to True to provide a more verbose output
DEBUG_MODE = False

# More iterations, more refinement... don't go crazy here to avoid hallucinations
MAX_ITERATIONS = 3

# Note: the step functions below use the module-level `llm` object,
# which is created in the __main__ block at the end of the script.

# ---- Steps ------------------------------------------------------------------

# Step 1: Generate initial code
def generate_code(state):
    prompt = state.get('description', '')
    iteration = state.get('iteration', 1)  # Ensure 'iteration' is initialized
    print(f'> CALL(iteration={iteration}): generate_code()')

    system_msg = 'You are an expert programmer. Generate a Python code snippet based on the given description.'
    user_prompt = f'Description: {prompt}\nProvide a well-structured Python script with comments.'

    try:
        response = llm.invoke([SystemMessage(content=system_msg), HumanMessage(content=user_prompt)])
        code_text = response.content if response and response.content else '# generate_code: No code generated.'
    except Exception as e:
        code_text = f'# generate_code: Error occurred during generation: {str(e)}'

    if DEBUG_MODE:
        print('---- DEBUG generate ------------------------------------------------------------')
        print(code_text)
        print('---- end DEBUG generate --------------------------------------------------------')

    return {'code': code_text, 'iteration': iteration}


# Step 2: Review code
def review_code(state):
    code = state.get('code', '')
    iteration = state.get('iteration', 1)  # Preserve 'iteration' if missing
    print(f'> CALL(iteration={iteration}): review_code()')

    system_msg = 'You are a senior software engineer. Review the given Python code and suggest improvements without providing any code examples.'
    user_prompt = f'Code:\n```\n{code}\n```\n\nProvide feedback and identify issues.'

    try:
        response = llm.invoke([SystemMessage(content=system_msg), HumanMessage(content=user_prompt)])
        review_text = response.content if response and response.content else 'No issues found.'
    except Exception as e:
        review_text = f'# review_code: Error occurred during review: {str(e)}'

    if DEBUG_MODE:
        print('---- DEBUG review --------------------------------------------------------------')
        print(f'----CODE:\n{code}\n')
        print(f'----REVIEW:\n{review_text}\n')
        print('---- end DEBUG review ----------------------------------------------------------')

    return {'code': code, 'review': review_text, 'iteration': iteration}  # Ensure "review" is returned


# Step 3: Improve & refine code based on review
def improve_code(state):
    code = state.get('code', '')
    review = state.get('review', 'No review feedback.')
    iteration = state.get('iteration', 1)  # Preserve 'iteration' if missing
    print(f'> CALL(iteration={iteration}): improve_code()')

    system_msg = 'You are an expert programmer. Improve the given code based on the provided feedback.'
    user_prompt = f'Original Code:\n{code}\n\nReview Feedback:\n{review}\n\nGenerate an improved version of the code.'

    try:
        response = llm.invoke([SystemMessage(content=system_msg), HumanMessage(content=user_prompt)])
        improved_code = response.content if response and response.content else code  # Keep original if failed
    except Exception as e:
        improved_code = f'{code}\n\n# Improvement failed: {str(e)}'

    if DEBUG_MODE:
        print('---- DEBUG improve -------------------------------------------------------------')
        print(f'----IMPROVED CODE:\n{improved_code}\n')
        print(f'----REVIEW:\n{review}\n')
        print('---- end DEBUG improve ---------------------------------------------------------')

    return {'code': improved_code, 'review': review, 'iteration': iteration + 1}  # Ensure iteration increases


# Step 4: Decide whether to improve again
def should_continue(state):
    review = state.get('review', '').lower()  # Default to empty string if missing
    iteration = state.get('iteration', 1)  # Preserve 'iteration' if missing

    # Ensure we have a valid review before deciding
    if not review:
        return 'done'  # If there's no review, stop the process

    # Check if improvement should continue based on iterations
    if iteration <= MAX_ITERATIONS:
        return 'continue'
    else:
        return 'done'


# ---- Workflow declaration ---------------------------------------------------

def create_workflow():
    # Define the LangGraph Workflow. With graph.Graph, each node receives the
    # previous node's return value, so every step returns all the keys it passes on.
    workflow = graph.Graph()
    workflow.add_node('generate', generate_code)
    workflow.add_node('review', review_code)
    workflow.add_node('improve', improve_code)

    # Define Execution Flow
    workflow.set_entry_point('generate')
    workflow.add_edge('generate', 'review')
    workflow.add_edge('review', 'improve')
    workflow.add_conditional_edges('improve', should_continue, {'continue': 'review', 'done': graph.END})

    # Compile the Graph
    return workflow.compile()


# ---- Entry point ------------------------------------------------------------

if __name__ == "__main__":
    # Initialize the Ollama LLM
    llm_model = 'codellama'
    llm = ChatOllama(model=llm_model)

    code_improver = create_workflow()

    print('---- ASCII representation of the graph -----------------------------------------')
    print('-' * 80)
    print(code_improver.get_graph().draw_ascii())  # Requires the grandalf package
    print('')

    print('---- Copy and paste this code into https://mermaid.live to see the graph -------')
    print('-' * 80)
    print(code_improver.get_graph().draw_mermaid())
    print('')

    state_intent = {
        'description': 'A Python function to check if a word is a palindrome or not.',
        'iteration': 1  # Ensure iteration starts at 1
    }

    print('')
    print('---- Execution log -------------------------------------------------------------')
    print('-' * 80)
    final_state = code_improver.invoke(state_intent)

    print('')
    print('---- Final improved code -------------------------------------------------------')
    print('-' * 80)
    print(final_state.get('code', 'No code generated.'))
```

Wrapping Up: Structured Flow, Agentic Feel

And there you have it — a self-reviewing, self-improving code generator, built using LangGraph, LangChain, and an LLM from Ollama. While it’s not a full-blown agent (yet), this little PoC already shows just how powerful a structured AI workflow can be.

By breaking the process into clear, reusable steps — generate, review, improve — and looping through them intelligently, we’ve created something that feels dynamic and iterative without losing control or predictability. It’s a great example of how agentic behavior can emerge from simple, well-designed flows.

Plus, the built-in Mermaid and ASCII diagramming? That’s just a bonus to help you (and future-you) understand and document what’s going on under the hood.

If this got your gears turning, stay tuned — in the next article, we’ll go one step further and explore how to evolve this into a fully agentic system, complete with tools, memory, and more autonomy.

Until then, feel free to grab the code, tweak the flow, or plug in your own models — and see what kind of AI dev buddy you can build.

About Me

I’m Gabriel, and I like computers. A lot.

For nearly 30 years, I’ve explored the many facets of technology—as a developer, researcher, sysadmin, security advisor, and now an AI enthusiast. Along the way, I’ve tackled challenges, broken a few things (and fixed them!), and discovered the joy of turning ideas into solutions. My journey has always been guided by curiosity, a love of learning, and a passion for solving problems in creative ways.

See ya around!